Conversion Rate Formula & Tips for Ecommerce Stores

7 min read time

Published on Jan 24, 2022

Written by Devin Pickell

For an ecommerce store, the sales conversion rate is one of the most important metrics you’ll track.

Knowing the conversion rate for product categories, product bundles, and even individual items can give you tons of insight into what your customers prefer. You can also use conversion rate to measure the success of sales or marketing campaigns.

For example, you can see whether one email marketing campaign was more successful than another by dividing each campaign’s number of sales by its total clicks or visits.

This article will help you measure your conversion rate using a basic formula, plus we’ll offer quick tips for improving your store’s conversion rate.

How do you calculate conversion rate?

To calculate conversion rate, take the number of conversions and divide it by the number of total clicks or visits. For example, if your latest marketing email had 1,000 clicks and 30 sales, your conversion rate would be 3%.

Conversion rate formula

Conversion rate = (conversions / total clicks or visits) * 100

Knowing your conversion rate is crucial to shaping the strategy of your sales and marketing campaigns. After all, how would you know what to change about an email or popup if you don’t even know how it performed?
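To make the formula concrete, here is a minimal Python sketch that applies it to the email example above and to a second campaign. The function name `conversion_rate` and the second campaign's numbers are assumptions made up for illustration; they do not come from Privy or from real campaign data.

```python
def conversion_rate(conversions: int, visits: int) -> float:
    """Return conversions as a percentage of total clicks or visits."""
    if visits <= 0:
        raise ValueError("visits must be greater than zero")
    if conversions < 0:
        raise ValueError("conversions cannot be negative")
    # Multiply first, then divide, to limit floating-point rounding.
    return conversions * 100 / visits


# The email from the example above: 1,000 clicks and 30 sales.
email_a = conversion_rate(conversions=30, visits=1000)

# A second campaign for comparison (hypothetical numbers).
email_b = conversion_rate(conversions=32, visits=800)

print(f"Email A: {email_a:.1f}%")  # Email A: 3.0%
print(f"Email B: {email_b:.1f}%")  # Email B: 4.0%
```

In this made-up comparison, Email B drew fewer clicks but converted a larger share of them, which is the kind of difference the raw sales count alone would hide.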

What is considered a good conversion rate?

In ecommerce, a conversion rate of about 2% could be considered average, so a store that doubles or triples that figure is in good shape. However, conversion rates differ by industry. The table below lists average conversion rates by industry, from Omni Convert:

| Industry | Conversion rate range |
| --- | --- |
| Arts and Crafts | 3.84% - 4.01% |
| Electrical and Commercial Equipment | 2.49% - 2.70% |
| Pet Care | 2.51% - 2.53% |
| Health and Wellness | 1.87% - 2.02% |
| Kitchen and Home Appliances | 1.61% - 1.72% |
| Home Accessories and Giftware | 1.46% - 1.55% |
| Cars and Motorcycles | 1.35% - 1.36% |
| Fashion, Clothing, and Accessories | 1.01% - 1.41% |
| Sports and Recreation | 1.18% |
| Food and Drink | 0.90% - 1.00% |
| Baby and Child | 0.71% - 0.87% |
| Agriculture | 0.62% - 1.41% |
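If you want to check your own store against these ranges, a small lookup is enough. The sketch below copies a few rows from the table into a dictionary; the names `BENCHMARKS` and `compare_to_benchmark` are hypothetical, and the sample store rate of 3.0% is made up for illustration.

```python
# Industry ranges copied from the table above, as (low %, high %).
# Only a few rows are included to keep the example short.
BENCHMARKS = {
    "Arts and Crafts": (3.84, 4.01),
    "Pet Care": (2.51, 2.53),
    "Sports and Recreation": (1.18, 1.18),  # single value in the table
    "Food and Drink": (0.90, 1.00),
}


def compare_to_benchmark(rate_percent: float, industry: str) -> str:
    """Say whether a rate is below, within, or above the industry range."""
    low, high = BENCHMARKS[industry]
    if rate_percent < low:
        return "below"
    if rate_percent > high:
        return "above"
    return "within"


# A hypothetical store converting at 3.0%.
print(compare_to_benchmark(3.0, "Pet Care"))         # above
print(compare_to_benchmark(3.0, "Arts and Crafts"))  # below
```

The same 3.0% rate lands on different sides of the benchmark depending on the industry, which is why the average for your own category is the more useful yardstick.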

How to improve your conversion rate with Privy

Now that you know how to calculate conversion rate, we’re going to show you a few ways Privy can help you improve it.

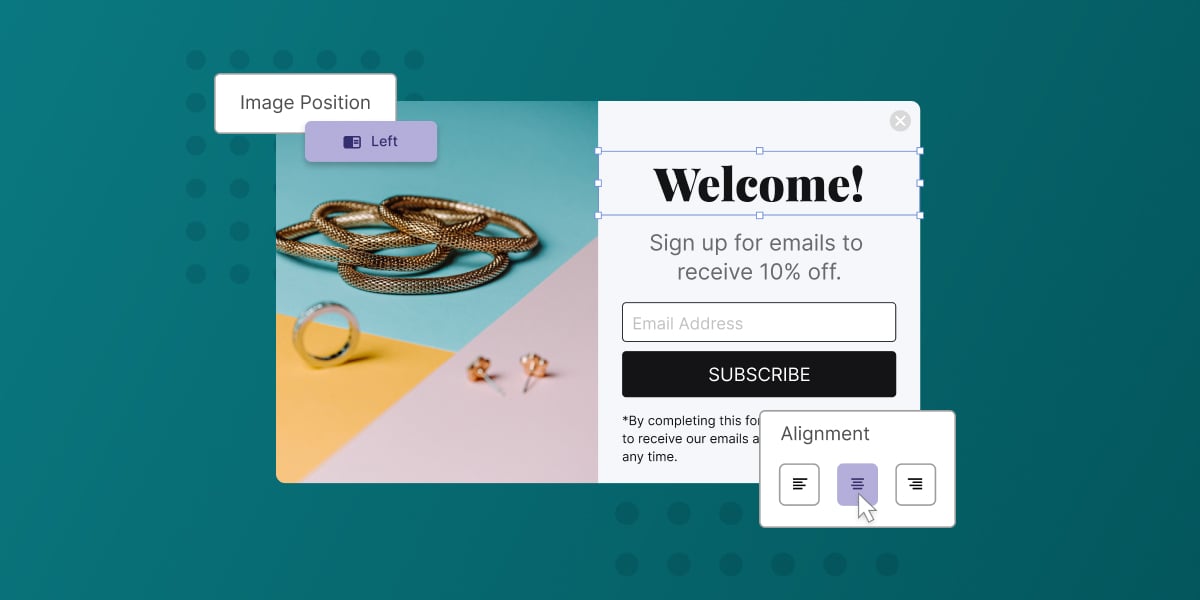

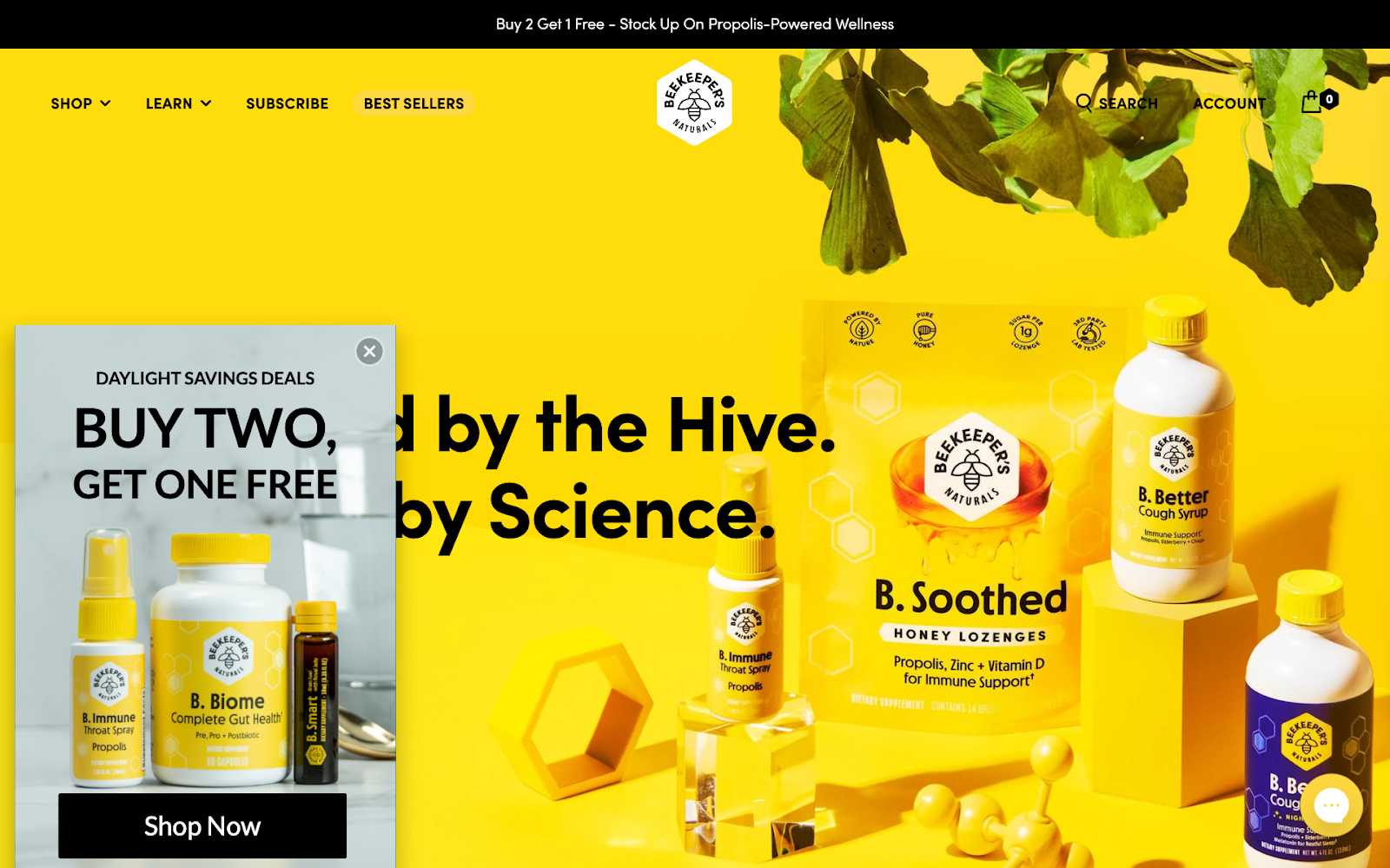

Run a popup campaign

One of the most tried and tested ways to improve website conversion rates is by running a popup campaign. In the example below, we can see Beekeeper’s Naturals running a flyout popup using Privy.

The good thing about popups is that they’re easy to design using Privy’s drag-and-drop editor. You can loop in all sorts of offerings to improve your conversion rate, including:

- Buy one, get one (BOGO)

- 15% off your first order

- Free shipping on orders $50 or more

- Free item after $100 order value

Add a cart saver to your checkout

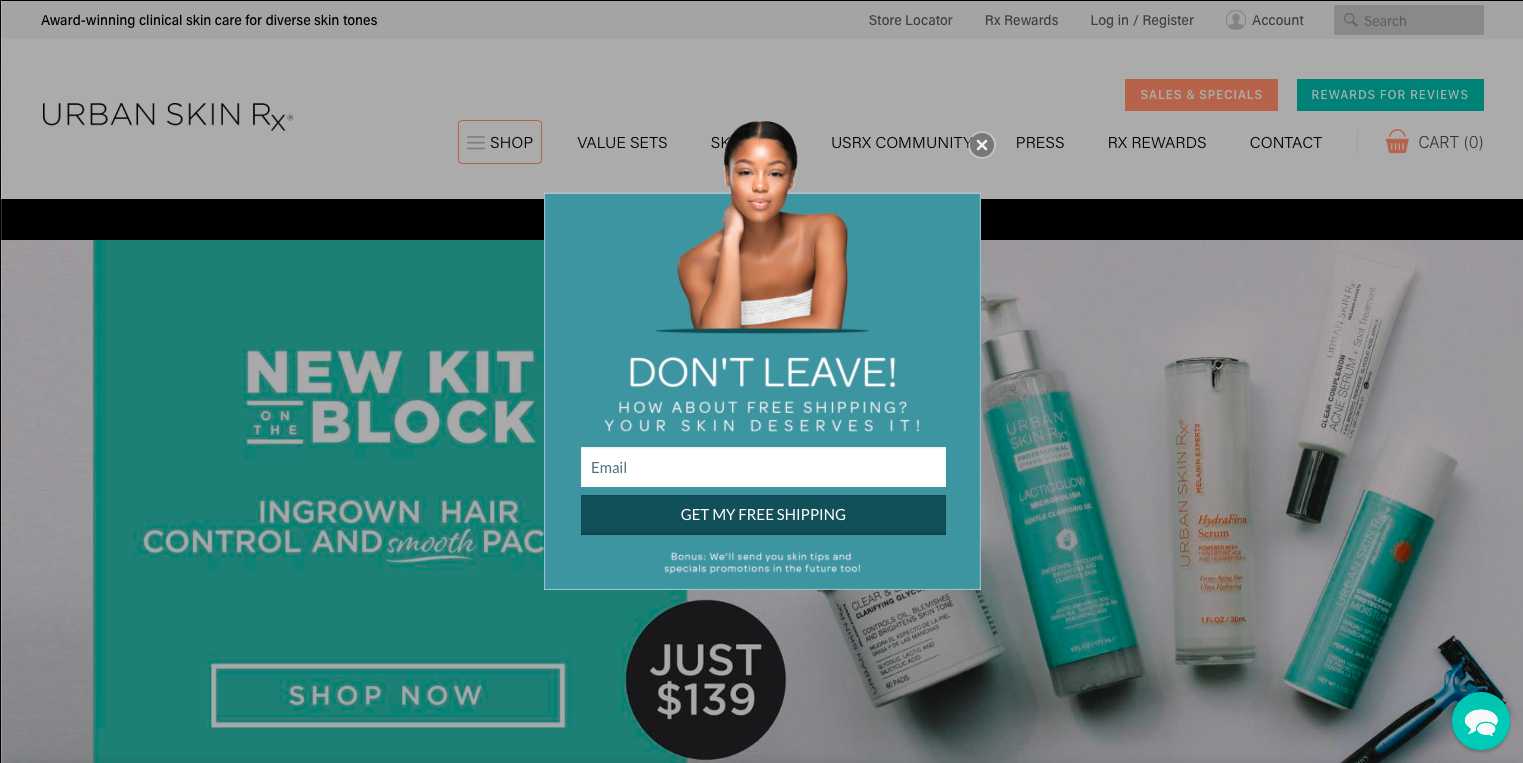

With a cart saver popup, you’ll not only improve your conversion rate, but you’ll also recover sales from shoppers who would otherwise have left your website. Below is an example of how Urban Skin Rx uses Privy to save abandoned carts.

There are two ways to run cart saver popups:

- Gather someone’s email address on the popup and then send them a cart abandonment email that contains a coupon code. You’ll be able to target them for future email marketing campaigns, but this may lower your conversion rate.

- Reveal the coupon code on the popup right away. While you won’t get someone’s email address, this could increase your conversion rate.

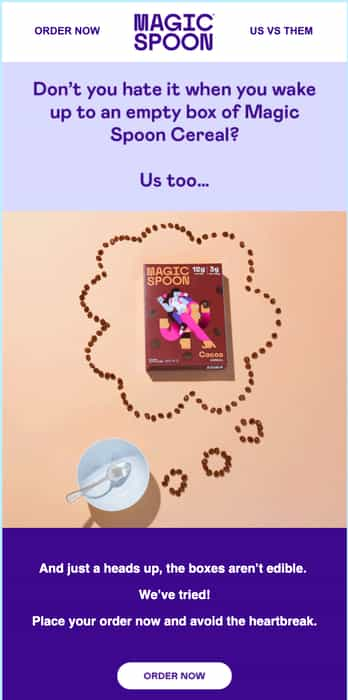

Send a customer winback email

If you have email contacts that have been dormant for a while, you can send them a friendly nudge with a customer winback email. Below is an example of how Magic Spoon re-engages customers in their email list.

Winback emails are common for brands that supply subscription boxes or rely on monthly order replenishments. They can even be sent automatically after a time frame you set in Privy.

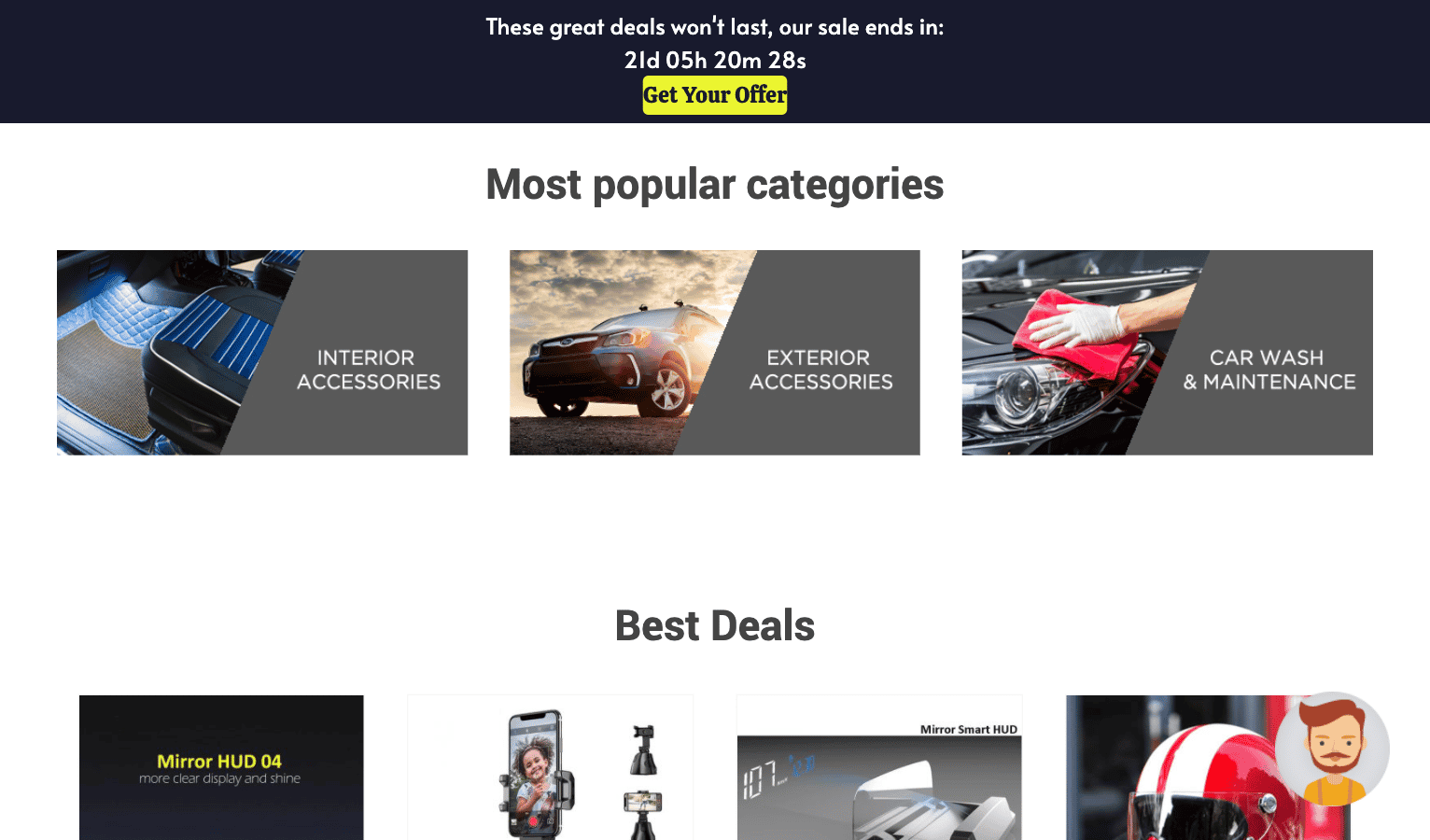

Add a countdown timer

Drive urgency the moment a store visitor lands on your website by adding a countdown timer at the top. The example below from Automotive Merch & Accessories shows what a 24-hour flash sale could look like using Privy.

Other tips for improving your conversion rate

Tighten up your copywriting

If the messaging on your popups or emails is confusing or long-winded, you could be hurting your conversion rate. Tighten up your copywriting and focus on how you provide value to your customers.

Test your popup triggering

If you’re showing your popups too soon or have multiple popups running on the same page, you could be hurting your conversion rate. Test your popup triggering so that you’re meeting customers at the right moment instead of overwhelming them.

Remove unnecessary form fields

If you require first name, last name, email address, and phone number in order to offer someone a discount, expect your conversion rate to be low. Remove as many unnecessary form fields as possible in your popups to improve conversion rate.

Add testimonials, ratings, and reviews

An estimated 95% of consumers read reviews before making a purchase. If you don’t have word-of-mouth marketing and star ratings on your product listings, your conversion rate won’t reach its potential.

Your conversion rate could be better

In this article, we showed you how to calculate your conversion rate and laid out the conversion rate averages by industry. This gives you a good idea of what other brands in your space are doing.

We also provided a variety of ways to improve your conversion rate, like running Privy popup campaigns, cart savers, winback emails, and countdown timers.

You can also improve your conversion rate by writing better copy, testing your popup triggering, removing unnecessary form fields, and adding word-of-mouth marketing to your product pages. Now, get out there and start testing ways to improve your conversion rate.
